Procedure for a school students' contest on environmental monitoring of the Earth from space using digital technologies

Organizational, technical, and methodological support for the ‘Images of the Earth from Space’ project for high school students, part of the ‘Space Automatic Object Identification and Artificial Intelligence’ contest of the ‘Planet Watch’ programme.

Task

The exploration of the Earth from space is becoming a comprehensive global endeavour. Space technology helps address many current problems concerning the Earth system (atmosphere, ocean, land surface, and biosphere). These include assessing and predicting changes in the state of the environment caused by the combined influence of natural and anthropogenic factors.

For instance, analyzing satellite images of the Earth's surface can help solve a wide range of tasks in industry, agriculture, forestry, transport, meteorology, and other sectors. Technologies for processing and analyzing these data, including open-source software tools, are being developed in parallel. Spacecraft can also be used to monitor the movement of many types of transport:

- ships, boats, and other watercraft;
- cars and trains;
- aircraft;
- unmanned sea vessels, including catamarans equipped with artificial intelligence systems.

Technologies for acquiring and processing satellite data were judged to be highly important. For that reason they were included among the thematic areas of the Planet Watch contest programme for high school students. Participants study these technologies in the ‘Images of the Earth from Space’ project, part of the Planet Watch programme.

SPbPU is a partner university of the programme, and its staff were tasked with developing a procedure for teaching high school students techniques for ecological monitoring of the Earth's surface.

In the first stage of the project, students work under the guidance of mentors. They solve a number of tasks on the segmentation and classification of water objects in satellite images, specifically rivers and reservoirs, using deep convolutional neural networks. Images from different years can then be compared to see how river channels change, new islands emerge, lakes dry up, and reservoirs are filled.
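
Once the network has segmented the same area in two different years, a pixel-wise comparison of the two water masks is one simple way to quantify such changes. The minimal sketch below is an illustration, not the project's method. It assumes the masks are already co-registered (aligned pixel to pixel) and stored as nested lists of 0/1 values. The per-pixel ground area `pixel_area_m2` is a hypothetical parameter that depends on the imagery used.

```python
from dataclasses import dataclass

Mask = list[list[int]]  # 1 = water, 0 = not water; all rows have equal length


@dataclass
class WaterChange:
    gained_m2: float  # dry in year A, water in year B (e.g. a filled reservoir)
    lost_m2: float    # water in year A, dry in year B (e.g. a drying lake)
    iou: float        # intersection over union of the two water masks


def compare_water_masks(mask_a: Mask, mask_b: Mask, pixel_area_m2: float) -> WaterChange:
    """Compare two co-registered binary water masks of the same area."""
    if len(mask_a) != len(mask_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(mask_a, mask_b)
    ):
        raise ValueError("masks must have the same shape")
    gained = lost = both = either = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                both += 1
            if a or b:
                either += 1
            if b and not a:
                gained += 1
            if a and not b:
                lost += 1
    # Two masks with no water at all are treated as identical.
    iou = both / either if either else 1.0
    return WaterChange(gained * pixel_area_m2, lost * pixel_area_m2, iou)
```

The gained and lost areas separate expanding water (filled reservoirs, flooded banks) from shrinking water (drying lakes, shifting channels). The IoU summarizes overall similarity in a single number.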

The second stage of the contest involves experiments with simulators and with the real control systems of controllable unmanned catamarans. Participants also work with automatic identification systems (AIS) based on small spacecraft, using AIS to trace the path of an unmanned catamaran across the sea area.

In the third stage, participants train a neural network for maritime vessel detection and write a research paper using a space image segmentation module.
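
A ship segmentation mask can be turned into individual vessel detections by labelling its connected regions and taking each region's bounding box. This is one common approach, not necessarily the one used in the contest. The sketch below assumes a binary mask stored as nested lists and uses 4-connectivity. The function name `find_vessels` and the `min_pixels` noise filter are illustrative assumptions.

```python
from collections import deque

Mask = list[list[int]]
Box = tuple[int, int, int, int]  # (row_min, col_min, row_max, col_max), inclusive


def find_vessels(mask: Mask, min_pixels: int = 1) -> list[Box]:
    """Return bounding boxes of 4-connected regions of 1s in a binary ship mask."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes: list[Box] = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill of one connected region.
            queue = deque([(r, c)])
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            count = 0
            while queue:
                y, x = queue.popleft()
                count += 1
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Drop tiny regions, which are often prediction noise rather than ships.
            if count >= min_pixels:
                boxes.append((r0, c0, r1, c1))
    return boxes
```

Regions are reported in the order in which the row-by-row scan first reaches them.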

Solution

The project team of the SPbPU NTI Centre developed the methodological support for the ‘Images of the Earth from Space’ project for high school students, part of the ‘Space Automatic Object Identification and Artificial Intelligence’ contest of the ‘Planet Watch’ programme. The team also carried out all organizational and technical work.

School students can carry out environmental monitoring of water bodies and experiment with developing artificial intelligence systems. Project groups will train a neural network to recognize water reservoirs in images. They will work with Polytechnic programmers to mark up images and create a training sample. For this purpose, SPbPU experts developed instructions for training the neural network on satellite images, together with training and methodological materials. The training sample was built from Aist-2D spacecraft images provided by the Progress Rocket Space Centre.

Students will also be able to observe vessel movements at sea using the Automatic Identification System (AIS). The system provides real-time information about ships in the Gulf of Finland near St. Petersburg. The receiving station is located on the premises of Peter the Great St. Petersburg Polytechnic University.

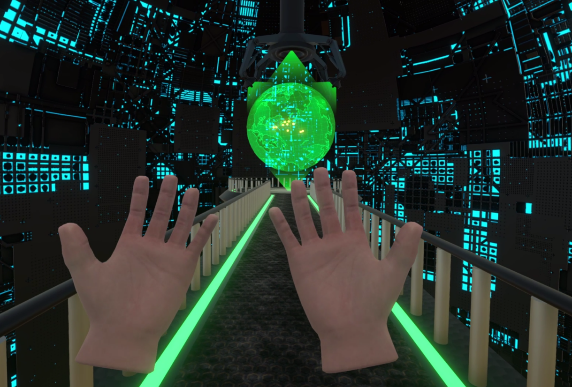

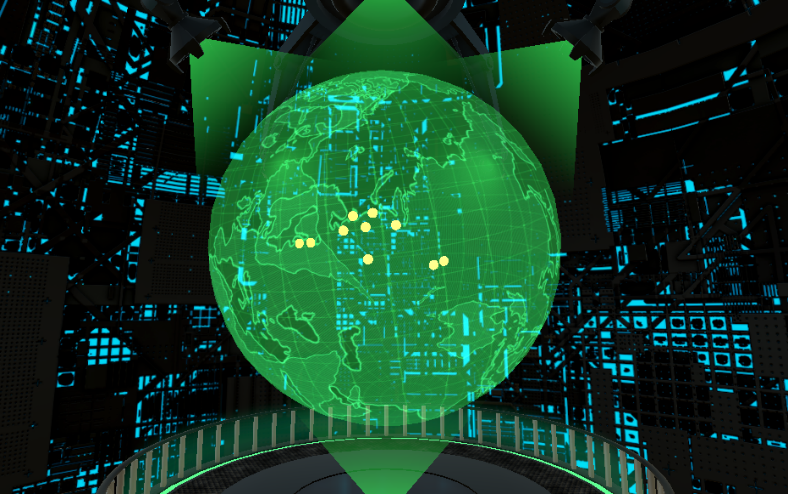

In December 2022, a virtual reality (VR) application was developed to showcase unprocessed and processed satellite imagery, making the project a real eye-catcher.

Details

Neural network training methodology

Once trained on the marked-up images, the neural network can be applied to a diverse range of tasks.

The training sample consists of ultra-high-resolution images measuring tens of thousands of pixels on each side. For instance, one image is 36,766 by 41,282 pixels. Such images are used to maximize the accuracy with which the neural network recognizes objects in the imagery.
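
At 36,766 × 41,282 pixels, such an image holds about 1.52 billion pixels. That is far more than a convolutional network can process in one pass, so large scenes are usually cut into fixed-size tiles. The source does not state how the images are split. The sketch below assumes square 512-pixel tiles with optional overlap. It only computes the tile windows, which can then be read from the image with any raster library.

```python
Window = tuple[int, int, int, int]  # (x, y, width, height) in pixels


def _starts(length: int, tile: int, step: int) -> list[int]:
    """Tile start offsets along one axis; stop once a tile reaches the border."""
    starts = [0]
    while starts[-1] + tile < length:
        starts.append(starts[-1] + step)
    return starts


def tile_windows(width: int, height: int, tile: int = 512, overlap: int = 0) -> list[Window]:
    """Cover a width x height image with tile x tile windows (assumed tiling scheme).

    Edge windows are clipped to the image border, so they may be smaller than `tile`.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    step = tile - overlap
    return [
        (x, y, min(tile, width - x), min(tile, height - y))
        for y in _starts(height, tile, step)
        for x in _starts(width, tile, step)
    ]
```

For the example image, 512-pixel tiles without overlap give a 72 × 81 grid, or 5,832 tiles. The last column is 414 pixels wide and the last row is 322 pixels high.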

Images of regions with varying topographical characteristics are selected for the sample. The selection takes into account the ratio and configuration of anthropogenic and natural areas on the photographed surface.
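
One way to build a sample that covers different ratios of anthropogenic and natural surface is stratified selection. Candidate scenes are grouped by their estimated share of built-up area, and scenes are drawn evenly from each group. The source does not say how SPbPU performs the selection. The `Scene` record, its `built_up_share` field, and the stratum boundaries below are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    scene_id: str
    # Hypothetical attribute: estimated fraction (0..1) of the frame covered
    # by anthropogenic objects such as buildings and roads.
    built_up_share: float


def stratified_selection(
    scenes: list[Scene],
    per_stratum: int,
    edges: tuple[float, ...] = (0.1, 0.4),  # hypothetical stratum boundaries
    seed: int = 0,
) -> list[Scene]:
    """Pick up to `per_stratum` scenes from each built-up-share stratum.

    With the default edges the strata are: mostly natural (< 0.1),
    mixed (0.1 to < 0.4) and mostly anthropogenic (>= 0.4).
    """
    strata: list[list[Scene]] = [[] for _ in range(len(edges) + 1)]
    for scene in scenes:
        # Stratum index = number of edges the share reaches or exceeds.
        index = sum(scene.built_up_share >= edge for edge in edges)
        strata[index].append(scene)
    rng = random.Random(seed)  # a fixed seed makes the selection reproducible
    chosen: list[Scene] = []
    for group in strata:
        chosen.extend(rng.sample(group, min(per_stratum, len(group))))
    return chosen
```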

To prepare images for inclusion in the sample, SPbPU staff developed an original manual markup methodology.

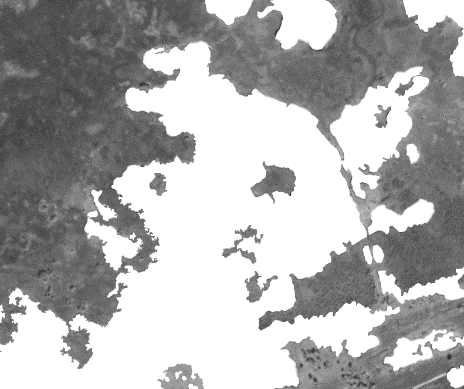

All images to be marked up are viewed and processed in grayscale. This makes frames quicker and easier to perceive and reduces file size.

Markup is done manually in the Adobe Photoshop raster editor with a graphics tablet:

1. A separate layer is created for the object's class.
2. The object is outlined with the stylus.
3. The outlined area is filled.

Under the methodology, the outline must include every pixel that is at least 50% occupied by the selected object.
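
The 50% rule can be read as a rasterization criterion: a pixel belongs to the object if at least half of its area lies inside the object's outline. In the project this judgement is made by hand in Photoshop. The sketch below only illustrates the rule. It estimates each pixel's coverage by sampling an n × n grid of points inside the pixel and testing each point against a polygon outline with the ray-casting point-in-polygon test. The polygon representation and the `samples` parameter are assumptions.

```python
Point = tuple[float, float]  # (x, y) in pixel units; pixel (row, col) spans [col, col+1) x [row, row+1)


def inside(p: Point, polygon: list[Point]) -> bool:
    """Ray-casting test: is point p inside a simple (non-self-intersecting) polygon?"""
    x, y = p
    result = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Toggle on every edge crossed by a horizontal ray from p towards +x.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                result = not result
    return result


def rasterize_50(polygon: list[Point], rows: int, cols: int, samples: int = 10) -> list[list[int]]:
    """Binary mask: 1 where an estimated >= 50% of the pixel area lies inside the polygon."""
    mask = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Sample points at the centres of a samples x samples sub-grid.
            hits = sum(
                inside((c + (i + 0.5) / samples, r + (j + 0.5) / samples), polygon)
                for i in range(samples)
                for j in range(samples)
            )
            row.append(1 if hits >= 0.5 * samples * samples else 0)
        mask.append(row)
    return mask
```

A pixel that is exactly half covered is included, which matches the "at least 50%" wording. With fewer samples the coverage estimate becomes coarser.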

The methodology covers three classes of objects: bodies of water, rivers, and ships. These objects have sufficiently distinctive properties to be identified reliably. They are easy to distinguish by colour and have clear boundaries and characteristic shapes.

The layers are then exported as separate images, one per class, with the objects shown in white on a black background. These images are fed to the neural network for training.
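
Before training, the per-class white-on-black images are often combined into a single label map in which each pixel holds a class index. The sketch below shows both steps under stated assumptions. The class indices, the precedence for overlapping classes (ships over rivers over bodies of water), and the threshold of 128 for reading a pixel as white are hypothetical choices, not details of the SPbPU methodology.

```python
# Hypothetical class indices; 0 is background.
CLASSES = {"water_body": 1, "river": 2, "ship": 3}
# Assumed precedence: later classes overwrite earlier ones where masks overlap.
PRECEDENCE = ["water_body", "river", "ship"]


def layer_to_image(layer: list[list[bool]]) -> list[list[int]]:
    """Render one class layer as an 8-bit white-on-black image (255 = object)."""
    return [[255 if value else 0 for value in row] for row in layer]


def images_to_label_map(images: dict[str, list[list[int]]], threshold: int = 128) -> list[list[int]]:
    """Merge per-class white-on-black images into one map of class indices."""
    if not images:
        raise ValueError("at least one class image is required")
    first = next(iter(images.values()))
    label = [[0] * len(first[0]) for _ in first]
    for name in PRECEDENCE:
        image = images.get(name)
        if image is None:
            continue  # a class may be absent from a given scene
        for r, row in enumerate(image):
            for c, value in enumerate(row):
                if value >= threshold:
                    label[r][c] = CLASSES[name]
    return label
```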

Example markup of the Forest class:

Automatic Identification Systems (AIS)

A set of software and hardware solutions, including the Automatic Identification System (AIS), is used to monitor ship movements at sea. Ships broadcast AIS messages based on data from global navigation satellite systems. By exchanging data on their movement and position in this way, ships around the world can navigate safely even in the busiest waters.
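
AIS data typically reach a receiving station as NMEA 0183 `!AIVDM` sentences whose payload packs binary fields into 6-bit ASCII characters. To show what such data contain, the sketch below verifies a sentence's XOR checksum. It then decodes the MMSI, navigation status, longitude, and latitude from a single-fragment class A position report (message types 1 to 3), following the publicly documented ITU-R M.1371 field layout. It is not the project's software, it ignores multi-fragment messages, and the input is an example sentence.

```python
from functools import reduce


def nmea_checksum_ok(sentence: str) -> bool:
    """Check the XOR checksum of the characters between '!' (or '$') and '*'."""
    body, _, given = sentence.strip().lstrip("!$").partition("*")
    computed = reduce(lambda acc, ch: acc ^ ord(ch), body, 0)
    return given != "" and computed == int(given[:2], 16)


def payload_bits(payload: str) -> str:
    """Unarmor the 6-bit ASCII payload into a string of '0'/'1' characters."""
    bits = []
    for ch in payload:
        value = ord(ch) - 48
        if value > 40:
            value -= 8
        bits.append(format(value, "06b"))
    return "".join(bits)


def field(bits: str, start: int, length: int, signed: bool = False) -> int:
    """Read an unsigned or two's-complement signed integer field."""
    value = int(bits[start:start + length], 2)
    if signed and bits[start] == "1":
        value -= 1 << length
    return value


def decode_position_report(sentence: str) -> dict:
    if not nmea_checksum_ok(sentence):
        raise ValueError("bad NMEA checksum")
    parts = sentence.strip().split(",")
    if parts[1] != "1":
        raise ValueError("multi-fragment messages are not handled in this sketch")
    bits = payload_bits(parts[5])
    msg_type = field(bits, 0, 6)
    if msg_type not in (1, 2, 3):
        raise ValueError(f"message type {msg_type} is not a class A position report")
    return {
        "type": msg_type,
        "mmsi": field(bits, 8, 30),
        "nav_status": field(bits, 38, 4),
        # Longitude and latitude are stored in units of 1/10000 minute.
        "lon": field(bits, 61, 28, signed=True) / 600_000,
        "lat": field(bits, 89, 27, signed=True) / 600_000,
    }
```

For the sentence `!AIVDM,1,1,,B,177KQJ5000G?tO`K>RA1wUbN0TKH,0*5C` the sketch returns MMSI 477553000, navigation status 5 (moored), longitude about -122.3458, and latitude about 47.5828.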

Project teams in the Space Automatic Object Identification and Artificial Intelligence contest will be able to experiment with simulators and with the real control systems of controllable unmanned catamarans. They will also work with Automatic Identification Systems (AIS) based on small spacecraft, which will be used to trace the path of an unmanned catamaran across the sea area.
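
Tracing a vessel's path from AIS data amounts to three steps: collect the position reports that carry its MMSI, order them by reception time, and join consecutive points. The sketch below does this and estimates the distance travelled with the haversine great-circle formula, assuming a spherical Earth with a radius of 6,371 km. The `Report` record, the MMSI values, and the coordinates in the usage test are hypothetical.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


@dataclass(frozen=True)
class Report:
    mmsi: int      # vessel identifier from the AIS message
    time_s: float  # reception time in seconds (hypothetical time base)
    lat: float     # degrees
    lon: float     # degrees


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def trace(reports: list[Report], mmsi: int) -> tuple[list[tuple[float, float]], float]:
    """Return the time-ordered track of one vessel and its length in metres."""
    track = sorted((r for r in reports if r.mmsi == mmsi), key=lambda r: r.time_s)
    length = sum(
        haversine_m(a.lat, a.lon, b.lat, b.lon) for a, b in zip(track, track[1:])
    )
    return [(r.lat, r.lon) for r in track], length
```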

VR equipment

The virtual reality (VR) component of the project is a sci-fi-style digital room that showcases raw and processed satellite images.

Young people interested in high-tech projects can test the application on their own. They can also refine it with the support of mentors from the Industrial Systems for Streaming Data Processing Laboratory of the SPbPU NTI Centre. As a result, the technology stack of the ‘Images of the Earth from Space’ project now includes 3D modelling and virtual reality system development.

Using the VR application evokes strong positive emotions and increases interest in the project. A VR headset cuts the user off from the external environment, so their attention is focused entirely on learning.

Users without a VR kit can run the PC version of the app, which has low system requirements and simplified controls.

The room and other parts of the environment were modelled in the Blender 3D computer graphics software. The remaining elements (lighting, sound effects, and interactivity) were implemented in the Unity environment using the C# programming language.

Technologies

| Category | Technologies |
| --- | --- |
| Programming languages | C++, Python, C |
| OS | Windows 10+, Linux, macOS |
| Libraries and frameworks | OpenCV, PyTorch, ONNX Runtime, Qt |
| 3D development environment | Blender, Substance 3D Painter, Marmoset Toolbag |
| Software development environment | Unity, Visual Studio, JetBrains Rider, SteamVR |
| Architectures | x64 |
| VCS | Git (GitLab) |
| IDE | Visual Studio Code |


Project team

- Technical director: A.V. Leksashov

- Scientific director: M.V. Bolsunovskaya

Contractor

- Tetrakub LLC

Partners

- Roscosmos State Corporation

- Progress Rocket Space Centre

- Industrial Systems for Streaming Data Processing Laboratory, NTI Centre, SPbPU