The ISSDP Laboratory of the NTI SPbPU Center is completing stage 2 of the development of a small-scale training and demonstration model of an unmanned vehicle

The design team of the Industrial Data Streaming Systems Laboratory of the NTI SPbPU Center is successfully testing the unmanned vehicle model in a virtual environment and plans to complete the second stage of its development in June 2020.

The device was created as a training and demonstration platform for teaching the technologies used to build autonomous transport systems. With the model, students of technical universities and specialists in related fields who want to improve their skills will be able to study the full range of technologies currently used by developers of ADAS (Advanced Driver Assistance Systems).

The project is being carried out together with the SPbPU Center for Computer Engineering (CompMechLab) as part of the demonstration training ground (TestBed) for new production technologies. The TestBed will host educational events for the training and professional development of scientific and engineering staff, and will present state-of-the-art developments and competencies in new production technologies to government officials and to representatives of industrial enterprises and small and medium-sized businesses. The model will become part of the training ground's equipment; the ground is scheduled to open in 2020 (room G.3.56, NIK SPbPU). The project is led by the TestBed team of the Strategic Development of Engineering Markets Scientific Laboratory of the NTI Center.

The model includes all the main types of hardware and software components used in the design of real unmanned vehicles. All modules reflect current developments in the industry and use machine vision, machine learning, simulation and other technologies. Surveys carried out before the work began showed that Russia does not yet have a training and demonstration complex of comparable level.

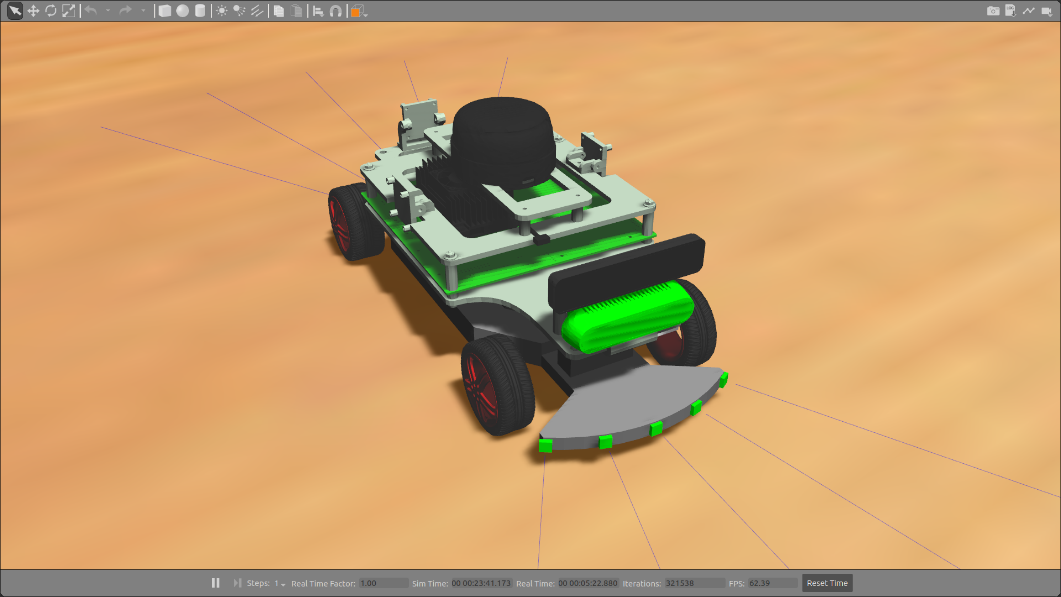

The hardware and software platform is based on the NVIDIA Jetson TX2 microcomputer and a heavily modified Traxxas 4-Tec 2.0 chassis. The standard sensor set includes encoders, 10 high-precision range sensors, a lidar, a depth camera, a tracking camera and 4 industrial video cameras. The software, built on the ROS Melodic framework, includes blocks for mapping, navigation, odometry, motion control and inter-module communication, as well as integration with the hardware controlled by an STM controller. The modules that collect and process sensor data use machine vision (capturing and stitching camera images), machine learning (pedestrian recognition algorithms) and artificial intelligence (planning routes around dynamic objects and obstacles in an intelligent control system).

Thanks to this set of components, the model will be able to:

- Build maps and read them

- Find the shortest route to a point on the map

- Avoid dynamic obstacles

- Recognize various signs and attributes of the road environment (markings, traffic signs, pedestrians, other vehicles) and act on them

- Generate overview images (surround view, bird's-eye view, orbital view, etc.).
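Shortest-route search on a map can be illustrated with A* on a 2D occupancy grid. The sketch below is a minimal, self-contained example under simplifying assumptions (4-connected grid, unit step cost, Manhattan-distance heuristic, cells marked 0 for free and 1 for occupied); the function name and the example grid are hypothetical, and it is not the planner used in the model's ROS navigation stack.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def a_star(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied).

    Returns the cells from start to goal inclusive, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates with unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Follow parent links back to the start to rebuild the path
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if g > g_cost[current]:
            continue  # stale heap entry: a cheaper route to this cell was found later
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = current
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],  # a wall with a single gap on the right
    [0, 0, 0, 0],
]
print(a_star(grid, (0, 0), (2, 0)))
```

On a real map the grid would come from the mapping block, and a planner would typically also inflate obstacles by the vehicle's footprint before searching.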

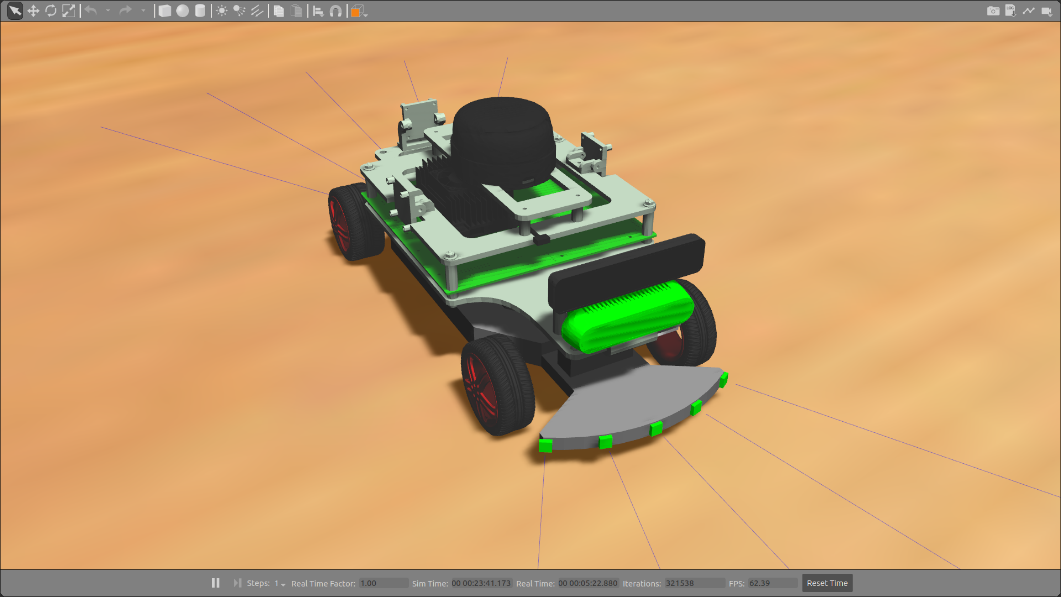

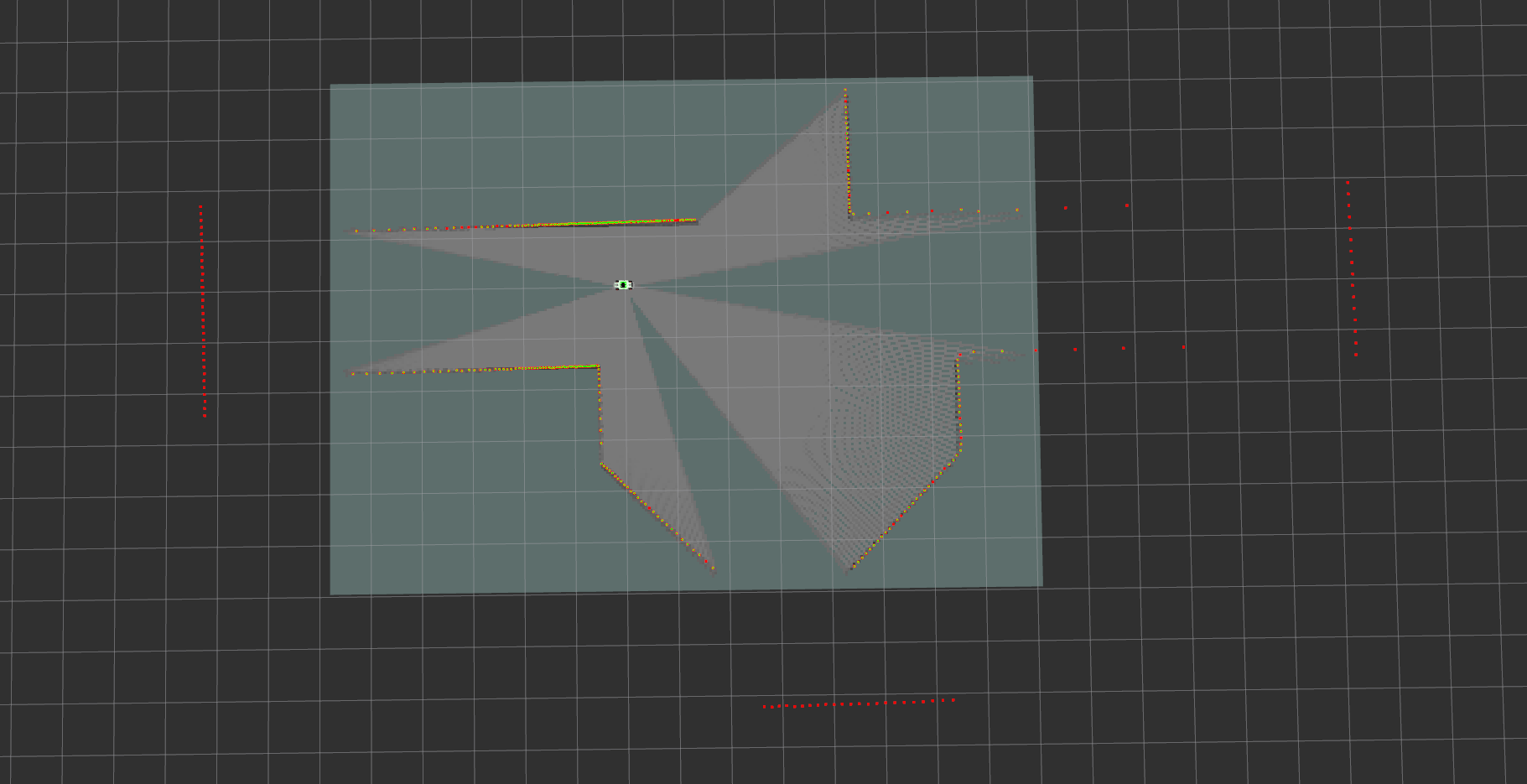

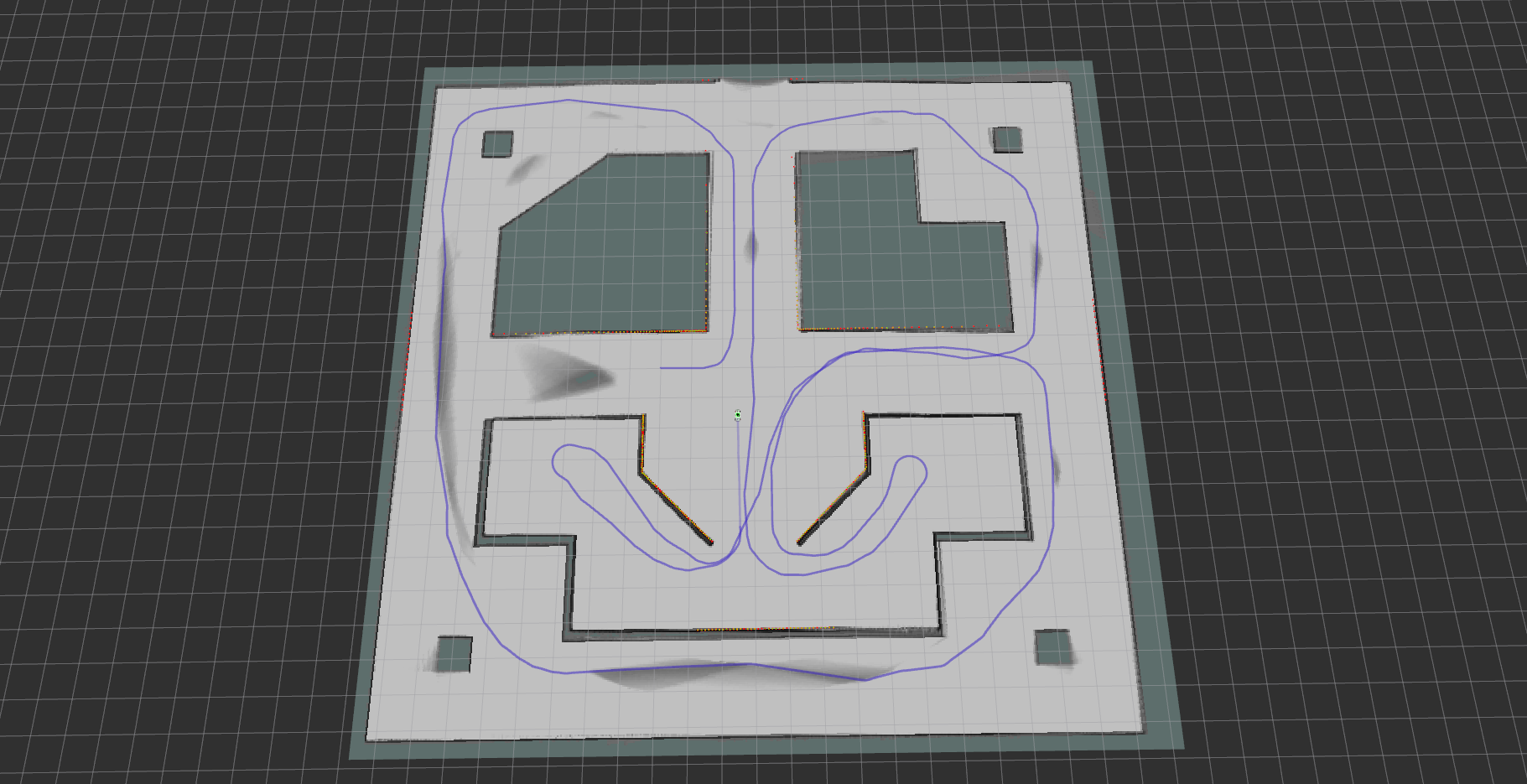
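A bird's-eye view is commonly produced by inverse perspective mapping: a planar homography maps points on the flat floor in a camera image to a top-down frame. The sketch below, assuming a flat floor and four known point correspondences, estimates the 3 x 3 homography (normalized so that its bottom-right entry is 1) by solving an 8 x 8 linear system in pure Python, then maps a pixel through it. The calibration points and function names are hypothetical, and the source does not state which method the model uses; OpenCV offers `cv2.getPerspectiveTransform` for the same estimation step.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4_points(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 homography H with H[2][2] = 1 that maps each src point onto its dst point."""
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, rearranged to be linear in h
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(hm: Matrix, p: Point) -> Point:
    """Map a point through H using homogeneous coordinates."""
    x, y = p
    w = hm[2][0] * x + hm[2][1] * y + hm[2][2]
    u = (hm[0][0] * x + hm[0][1] * y + hm[0][2]) / w
    v = (hm[1][0] * x + hm[1][1] * y + hm[1][2]) / w
    return (u, v)


# Hypothetical calibration: four floor pixels forming a trapezoid in the camera
# image, and where they should land in the top-down view (a rectangle).
image_pts = [(220.0, 300.0), (420.0, 300.0), (600.0, 470.0), (40.0, 470.0)]
top_down_pts = [(0.0, 0.0), (200.0, 0.0), (200.0, 300.0), (0.0, 300.0)]

H = homography_from_4_points(image_pts, top_down_pts)
print(apply_homography(H, (320.0, 385.0)))  # a floor pixel inside the trapezoid
```

In practice the resulting matrix is used to warp the whole image (for example with OpenCV's `cv2.warpPerspective`), and the top-down views from several cameras can then be combined into a surround view.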

Simulation is used to debug the software, which can significantly speed up development. A virtual model of the device with all its sensors was created in the Gazebo simulator and is currently being tested in a number of virtual environments with obstacles. Preliminary tests showed that the software of the corresponding blocks fully performed its tasks.

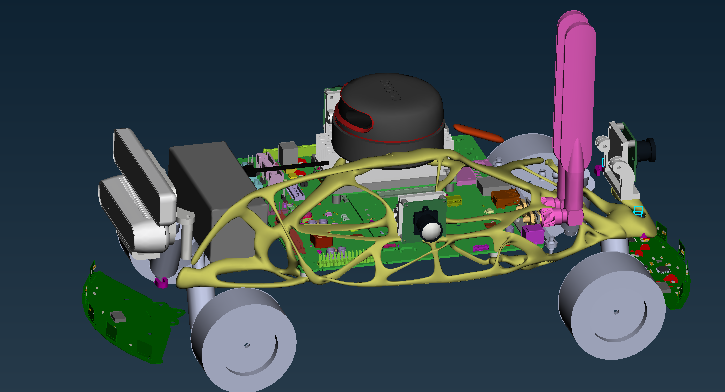

To improve the platform's technical characteristics, it was agreed to refine the chassis design together with specialists of the SPbPU Center for Computer Engineering (CompMechLab).

Engineering Center staff carried out calculations for the current chassis design, which has a turning circle of under 80 cm. To achieve this, the front-axle steering gear (steering knuckles, tie rods, steering arms) was reworked, increasing the front-wheel steering angle from 30 to 45 degrees, and the rear suspension was modified so that the rear axle is also steerable, with a steering angle of 20 degrees and its own servo.
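The effect of the larger front steering angle and the steerable rear axle can be estimated with a kinematic bicycle model. If the front wheels turn by an angle δf and the rear wheels turn by δr in the opposite direction, the instantaneous centre of rotation lies at a distance R = L / (tan δf + tan δr) from the vehicle's centreline, where L is the wheelbase. This is a sketch under stated assumptions, not the Engineering Center's calculation: it ignores tyre slip, measures the radius to the centreline rather than to the outer edge of the body, and uses a hypothetical wheelbase.

```python
import math


def turning_radius(wheelbase_m: float, front_deg: float, rear_deg: float = 0.0) -> float:
    """Distance from the instantaneous centre of rotation to the vehicle centreline.

    Kinematic bicycle model without tyre slip. The rear wheels are assumed to
    steer opposite to the front wheels (counter-phase), which tightens the turn.
    """
    tan_sum = math.tan(math.radians(front_deg)) + math.tan(math.radians(rear_deg))
    if tan_sum <= 0:
        raise ValueError("steering angles must produce a turn")
    return wheelbase_m / tan_sum


WHEELBASE_M = 0.30  # hypothetical wheelbase, not a measured value for this chassis

for label, front, rear in [
    ("original front steering", 30.0, 0.0),
    ("reworked front steering", 45.0, 0.0),
    ("front + rear steering", 45.0, 20.0),
]:
    r = turning_radius(WHEELBASE_M, front, rear)
    print(f"{label:24s} R = {r:.3f} m")
```

Whatever the actual wheelbase, the ratios hold in this model: raising the front angle from 30 to 45 degrees shrinks R by a factor of tan 45° / tan 30° ≈ 1.73, and adding 20 degrees of counter-phase rear steering shrinks it by a further factor of about 1.36.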

This not only reduced the model's turning radius but also made the platform more flexible to control: at high speeds only the front axle needs to be steered, which increases stability, while at low speeds the rear axle can be brought into the control loop to improve maneuverability.
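The speed-dependent steering strategy can be expressed as a small steering mixer. In this sketch the function names, the threshold speed, the rear-to-front gain and the sign convention are all hypothetical, since the source does not describe the actual control law: above the threshold only the front axle steers, and below it the rear axle is commanded in counter-phase, clamped to its 20-degree limit.

```python
from typing import Tuple

FRONT_LIMIT_DEG = 45.0  # front-wheel steering limit after the rework
REAR_LIMIT_DEG = 20.0   # rear-axle steering limit
LOW_SPEED_MPS = 0.5     # hypothetical speed below which the rear axle is engaged
REAR_GAIN = 0.5         # hypothetical ratio of rear to front steering angle


def clamp(value: float, limit: float) -> float:
    """Limit value to the symmetric range [-limit, limit]."""
    return max(-limit, min(limit, value))


def mix_steering(front_cmd_deg: float, speed_mps: float) -> Tuple[float, float]:
    """Return (front_deg, rear_deg) servo targets for a requested front angle.

    Assumed convention: positive angles turn the wheels left. The rear angle
    has the opposite sign (counter-phase) to shorten the turn at low speed.
    """
    front = clamp(front_cmd_deg, FRONT_LIMIT_DEG)
    if abs(speed_mps) >= LOW_SPEED_MPS:
        return front, 0.0  # high speed: front axle only, for stability
    rear = clamp(-REAR_GAIN * front, REAR_LIMIT_DEG)
    return front, rear


print(mix_steering(40.0, 2.0))  # fast: rear axle stays straight
print(mix_steering(40.0, 0.2))  # slow: rear axle steers opposite, up to its limit
```

A production controller would more likely blend the rear angle smoothly with speed rather than switch at a single threshold, to avoid a sudden change in handling.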

The upper deck (currently a transparent plexiglass structure) will later be replaced by a deck developed using bionic design approaches. Engineering Center staff have completed the measurements and begun developing the deck.

Pilot body model with a deck engineered using bionic design approaches

The modular design allows the device to be adapted for various missions. The software is built on open-source components, so users are free to modify the device further. The platform is easy to operate thanks to its small dimensions (40 x 25 cm) and small turning radius (under 40 cm).

Development of the test sample (stage 1) is complete. Work is now under way on stage 2, which involves creating a prototype model capable of area mapping, navigation, orientation and obstacle avoidance; completion is scheduled for June 2020. Stage 3, scheduled for completion in 2021, includes the development of a commercial version of the platform and the implementation of ADAS.

Virtual model in the Gazebo simulator

Initial map of the area in the Gazebo simulator, which simulates a physical environment with obstacles for the model to pass through

Area map built by the model from lidar data after power-on, before it starts moving

Area map built by the model from lidar data using the mapping block (Cartographer) after the traversal
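Cartographer performs full SLAM, estimating the vehicle's pose as well as the map. The basic step of turning one planar lidar scan into map cells can be sketched as below, assuming the lidar pose is already known and passing the fields of a ROS `sensor_msgs/LaserScan` message (`angle_min`, `angle_increment`, `range_min`, `range_max`, `ranges`) as plain Python values. The function name, grid resolution and example scan are hypothetical, and this is not Cartographer's algorithm.

```python
import math
from typing import List, Set, Tuple

Cell = Tuple[int, int]


def scan_to_occupied_cells(
    ranges: List[float],
    angle_min: float,
    angle_increment: float,
    range_min: float,
    range_max: float,
    pose: Tuple[float, float, float],
    resolution: float = 0.05,
) -> Set[Cell]:
    """Grid cells containing the endpoints of one planar lidar scan.

    `pose` is the (x, y, yaw) of the lidar in the map frame in metres and
    radians and is assumed known; `resolution` is the cell size in metres.
    """
    x0, y0, yaw = pose
    occupied: Set[Cell] = set()
    for i, r in enumerate(ranges):
        # Skip invalid returns: too close, too far, inf, or NaN (NaN fails the comparison)
        if not (range_min <= r <= range_max) or math.isinf(r):
            continue
        beam = yaw + angle_min + i * angle_increment
        hx = x0 + r * math.cos(beam)
        hy = y0 + r * math.sin(beam)
        occupied.add((math.floor(hx / resolution), math.floor(hy / resolution)))
    return occupied


# Hypothetical 4-beam scan from a lidar at the origin facing +x
cells = scan_to_occupied_cells(
    [1.0, 1.0, float("inf"), 0.01], 0.0, math.pi / 2, 0.05, 10.0, (0.0, 0.0, 0.0), resolution=0.25
)
print(sorted(cells))
```

Occupancy-grid mappers typically also mark the free cells along each beam (for example with Bresenham's line algorithm) and accumulate hit and miss probabilities over many scans instead of marking cells once.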